I couldn’t help myself - I posed simple data related questions to chatGPT. I was curious if the model could interpret data, reason about causation, and consider what actions to take.

Like the original formulation of the Turing test, I was interested to see if it could “imitate” a thoughtful analyst. Every data analyst has worked with folks in the business who get concepts like “statistical significance” and “correlation, not causation” - and, to be reductive, successfully imitate the analyst.

Every data analyst has also experienced instances where data is mis-interpreted (willfully or otherwise), leading to wrong conclusions.

So, I was genuinely curious where chatGPT landed.

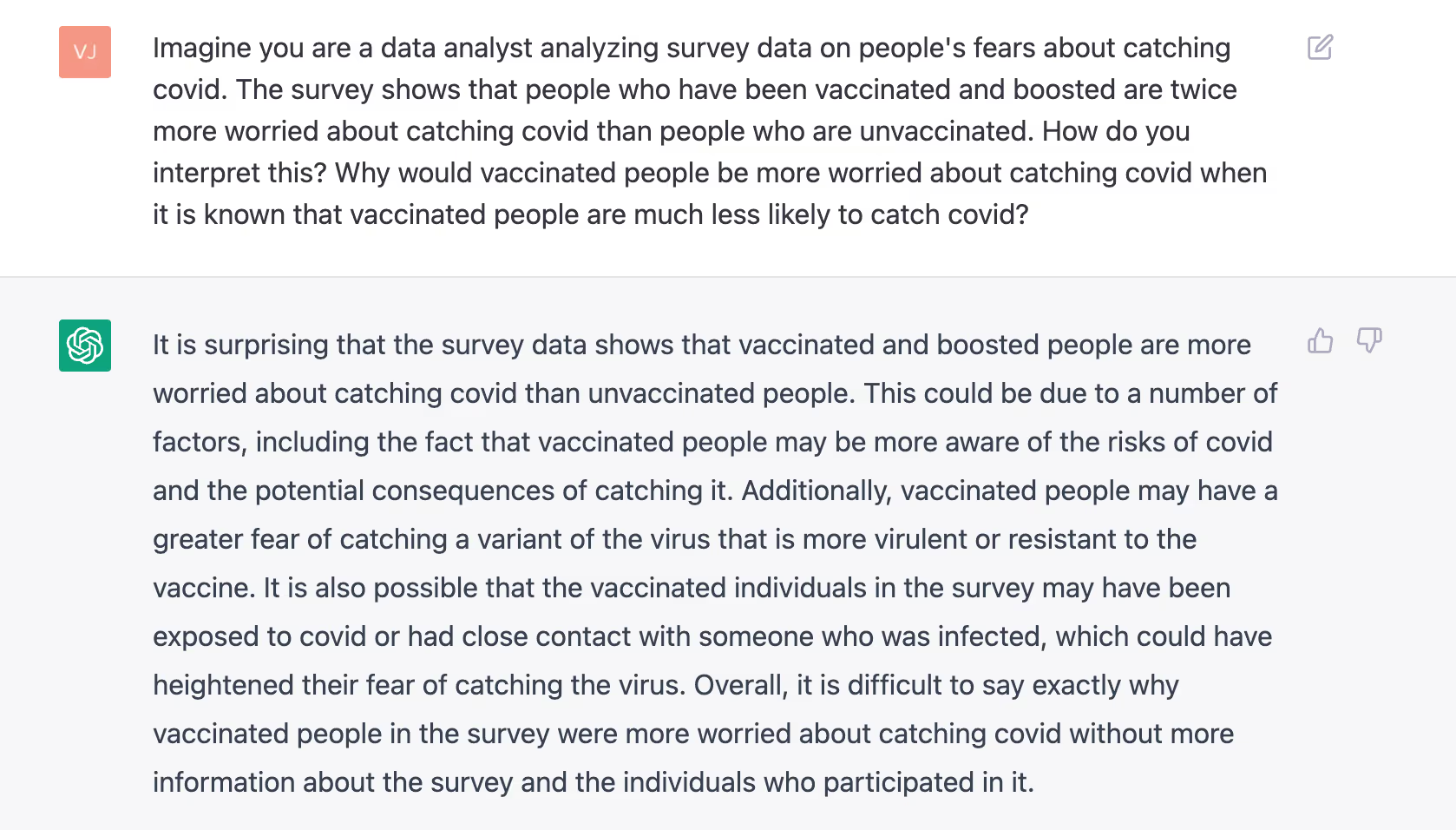

I first posed a simple question about a survey which showed that vaccinated people are more likely to be worried about Covid-19 - could it hypothesize that it’s precisely because of their increased worry that these people got vaccinated?

chatGPT’s response hints at this causation equation at the beginning but it’s not explicit. It also throws out a surprising, valid hypothesis that folks who are vaccinated could have been exposed to someone with Covid - a common causal factor for both the increased worry and the vaccination - but overall, like a true analyst, the response is thought out and well caveated - a strong imitation game for sure.

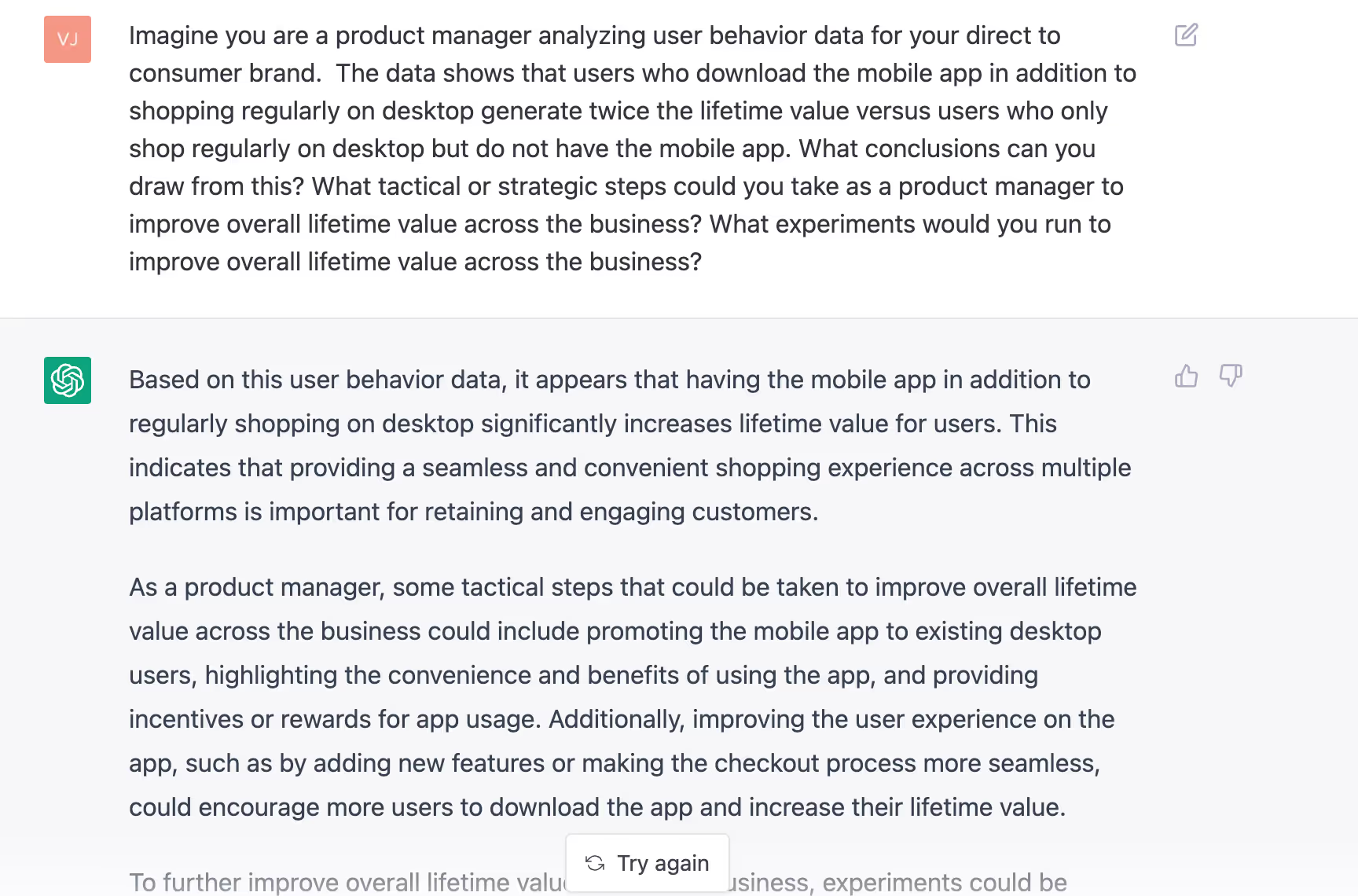

Then, I posed a real world business problem - I asked it to role-play as a product manager (PM) in a D2C company, and to interpret the data that mobile app users are twice as loyal as non-app users. I asked what it meant and what actions would it take as a PM. The response was good - it laid out how the app experience could be improved to further incentivize users to try the app, and it mentioned how incentives/promotions could be offered to drive users to the app.

But, it completely missed the possibility that causation could be reversed - that more loyal users download the app more. But, isn’t this just typical of us?

The promotions response offered a data-related reframe - I asked if it’s the right decision to provide promotions to drive users to the app, and how to decide the promotion level? The answer got a bit vague - it basically said that steeper promotions could erode revenue and profitability and that we should carefully consider the decision.

I probed further and asked for specifics around calculations and metrics to assess the promotional impact - and the answer remained a bit vague. And it threw in metrics to consider like user engagement. Again, on this point, it definitely imitated us well - adding vanity metrics to the consideration set 🙂

My quick jump-to-conclusions take is that the model is very good at boilerplate conversations and content - and I do see a wide range of use cases assisting or even replacing humans in writing messages, emails, summaries, docs, code, maybe even some fictional prose and poetry. I think it will expose how much of our day to day writing and communication is boilerplate, even trite - that can be automated away to refocus our time, and elevate the human aspects of our communication to another level. That’s the bull case.

But when it comes to reasoning and decision-making, i’d say there’s a long way to go. But, hey, this was a super small sample (not statistically significant!).

Data analysts, data scientists, and decision-makers on the ground - feed this model your real-world data interpretation and reasoning use cases. And please do share in the comments whatever you find!