The perennial problem in the data world is the tension between consumer flexibility and governed consistency.

This balance is now under tremendous strain as consumers and use cases expand while data teams operate under tight personnel and time constraints. Governed, pre-defined datasets have their advantages. The more we pre-join, pre-filter, and pre-aggregate, the more we guarantee consistency across the organization. Two different consumers are likely to see the same value for the same metric, which prevents confusion and saves hours of organizational time otherwise spent reconciling numbers.

But this comes at a cost: end consumers now have a harder time getting data in the shape and size they want. And there is a secondary issue: as more logic gets solidified in code by upstream data and analytics engineers, it disempowers consumers at the edge, who are closer to the business.

In a single decade, I have watched consumers go from operating on limited, heavily pre-transformed data, to flexible, on-demand calculations at the edge, and now back to more pre-transformed data.

Why? Because the modal consumption use case is aggregated metrics inside reporting tools, there is an inevitable push towards pre-transformed datasets. This use case favors consolidating logic upstream and generating pre-defined datasets for visualization.

But ironically, as more users get access to consistent pre-transformed datasets, more consumers ALSO want to manipulate atomic data at the edge: to flexibly filter, aggregate, slice, and dice, to analyze and operate their functions, and to infuse data into their day-to-day tasks.

Resolving this tension starts with acknowledging it. Some organizations implicitly accept that serving aggregated metrics consistently is a better investment than empowering consumers to apply logic flexibly at the edge. Others are happy to let “shadow” pipelines bloom and to incur the cost of inconsistencies, and even of erroneous data and decision-making, in the service of democratization.

In either scenario, data teams struggle: in the former, they are a service desk battling requests; in the latter, they play reconciliation cops.

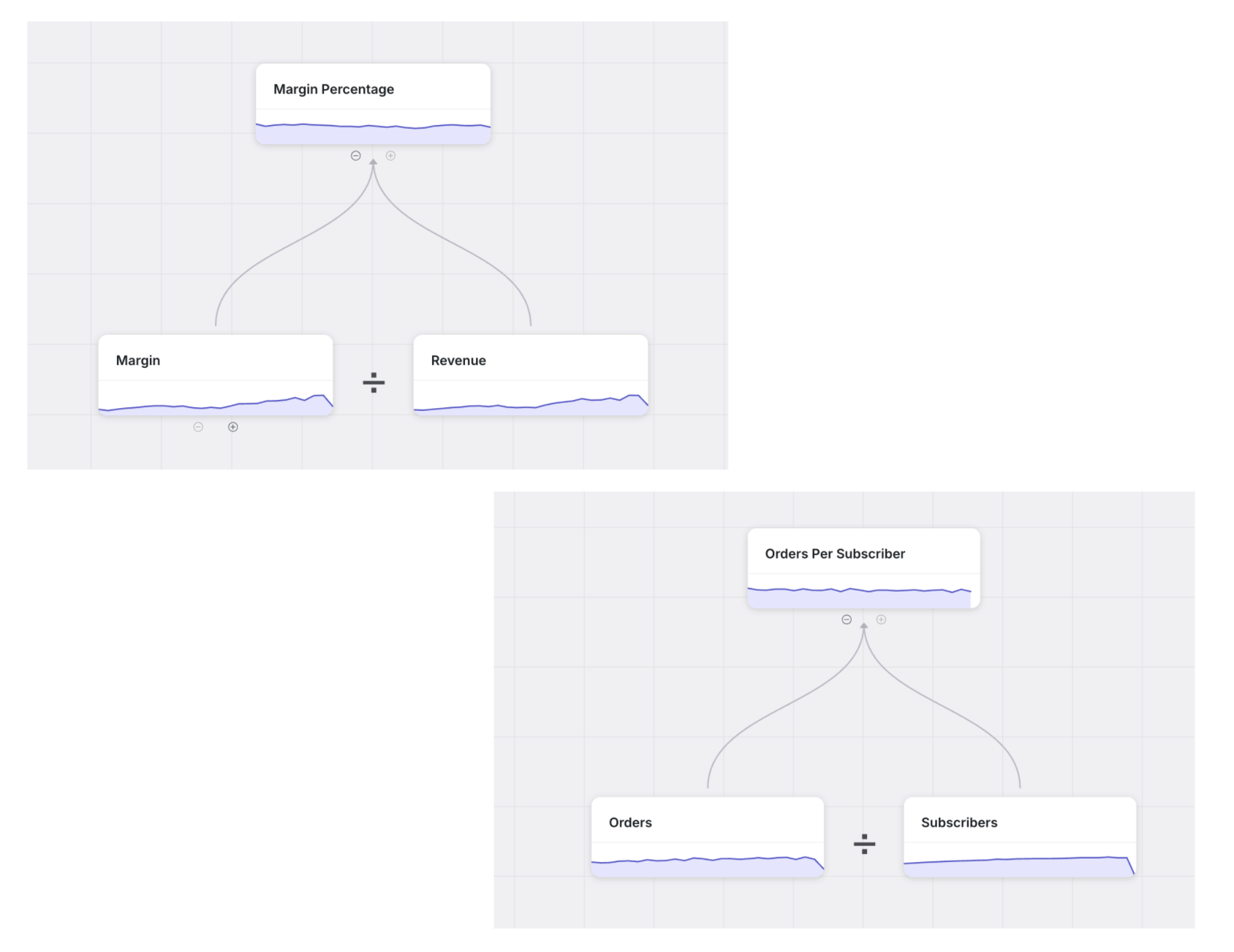

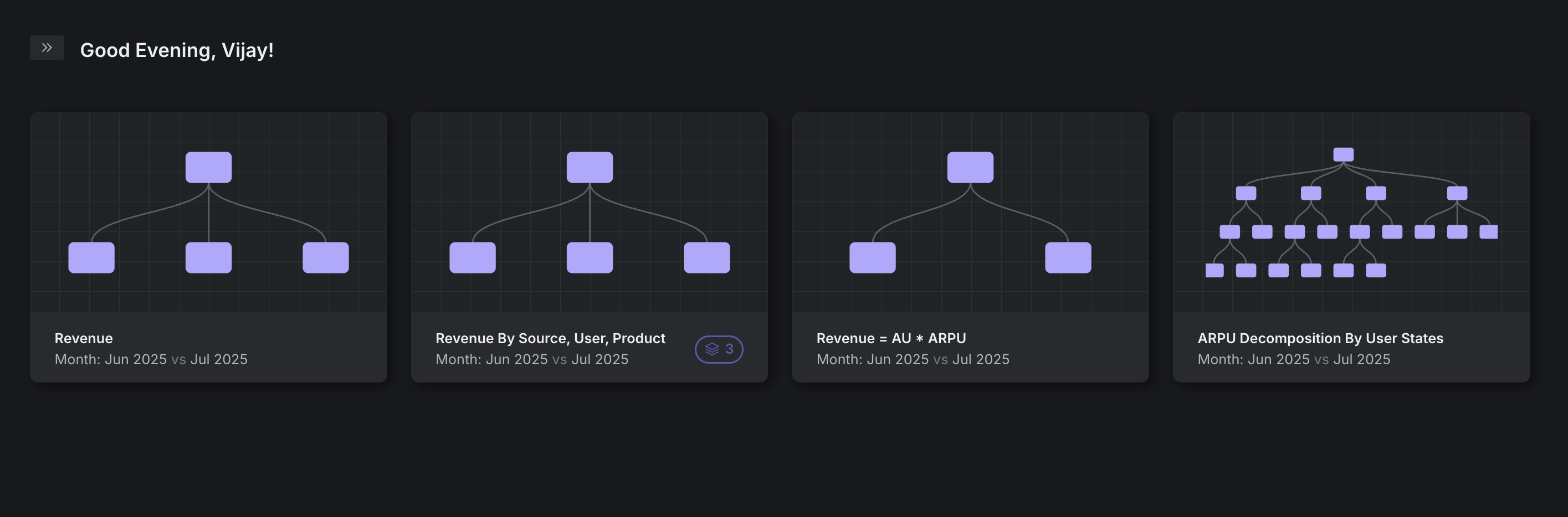

How do we evolve? If data consumers operated not on pre-transformed datasets but on a well-defined abstraction that gave them “freedom on governed rails”, we would start to move towards the right balance. This is why I’m excited about the rumblings around metrics and semantic layers.
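To make “freedom on governed rails” a little more concrete, here is a minimal sketch of what such an abstraction could look like. It is purely illustrative and not any particular product's API: `Metric`, `query`, the `ORDERS` rows, and the `REVENUE` definition are all hypothetical names I made up for this example. The metric's logic is defined once, and consumers slice and filter atomic rows freely, but only along dimensions the definition allows.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Metric:
    """A governed metric definition (hypothetical, for illustration only)."""
    name: str
    measure: str                                   # column to aggregate
    aggregate: Callable[[Iterable[float]], float]  # the single agreed-upon logic
    dimensions: frozenset                          # columns consumers may slice by


def query(metric, rows, group_by=(), where=None):
    """Compute a metric over atomic rows, sliced however the consumer likes,
    as long as every requested dimension is governed by the definition."""
    where = where or {}
    ungoverned = (set(group_by) | set(where)) - metric.dimensions
    if ungoverned:
        raise ValueError(f"{metric.name}: ungoverned dimensions {sorted(ungoverned)}")

    buckets = defaultdict(list)
    for row in rows:
        if all(row[col] == value for col, value in where.items()):
            key = tuple(row[dim] for dim in group_by)
            buckets[key].append(row[metric.measure])
    return {key: metric.aggregate(values) for key, values in buckets.items()}


# Made-up atomic data and a made-up metric definition.
ORDERS = [
    {"region": "EU", "channel": "web", "amount": 100.0},
    {"region": "EU", "channel": "store", "amount": 50.0},
    {"region": "US", "channel": "web", "amount": 70.0},
]
REVENUE = Metric("revenue", "amount", sum, frozenset({"region", "channel"}))

by_region = query(REVENUE, ORDERS, group_by=("region",))
web_only = query(REVENUE, ORDERS, where={"channel": "web"})
```

Two consumers asking different questions both go through the same definition of revenue, so their numbers reconcile by construction. A request to slice by a column outside the governed set, such as `group_by=("customer",)`, is rejected rather than silently answered with ad hoc logic.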

But there is a key second step: it is insufficient to expose these emerging abstractions only to highly technical users operating in code. We need to truly democratize flexible consumption and put it in the hands of the data analyst, the product manager, the FP&A expert, the logistics specialist, the rev ops expert, and so on, so that these consumers get what they need when they need it and can operate at the business edge with speed and richness of information.

We at Trace are excited to be working at the forefront of this.