The debate about data's role, best practices, and the usefulness of tools can be anchored in one fundamental insight: analytics is now a dual-mode discipline, a fusion of science and engineering.

At its core, analytics is about uncovering and optimizing the input-output causal mechanisms within a business. The primary aim is to operationalize the scientific method within organizational processes: formulating hypotheses, implementing interventions, and scrutinizing the resulting data to either confirm or challenge those hypotheses. This iterative loop of analysis and refinement drives continuous improvement in both tactics and strategy.

From this vantage point, doing data is doing science. In practice, however, this process is deeply intertwined with solving engineering problems.

Efficiently building and maintaining data models, and ensuring smooth collaboration within data teams, requires strong engineering solutions. Even at the atomic level, the efficiency of writing a single SQL query matters, just as software developers focus on optimizing their coding productivity.

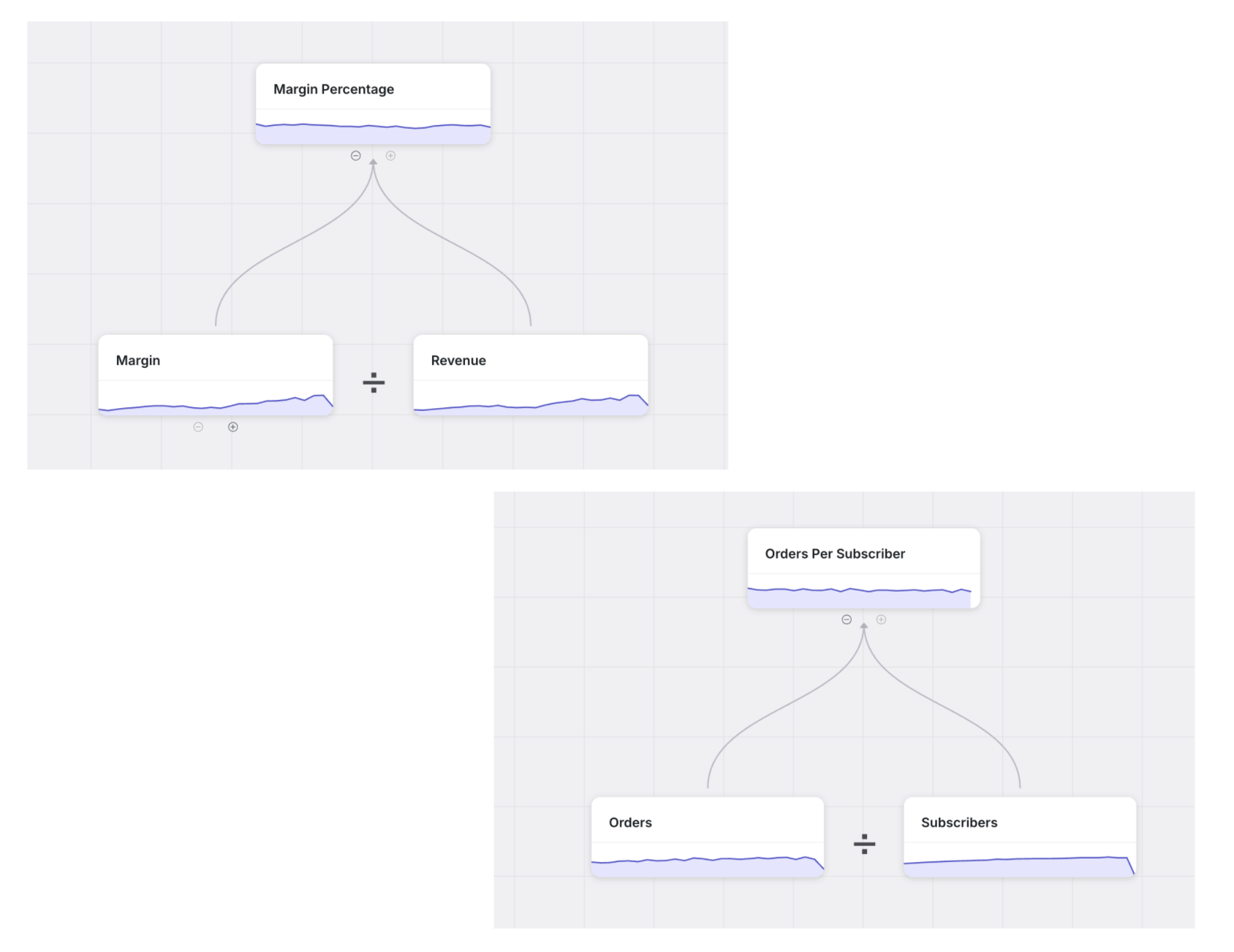

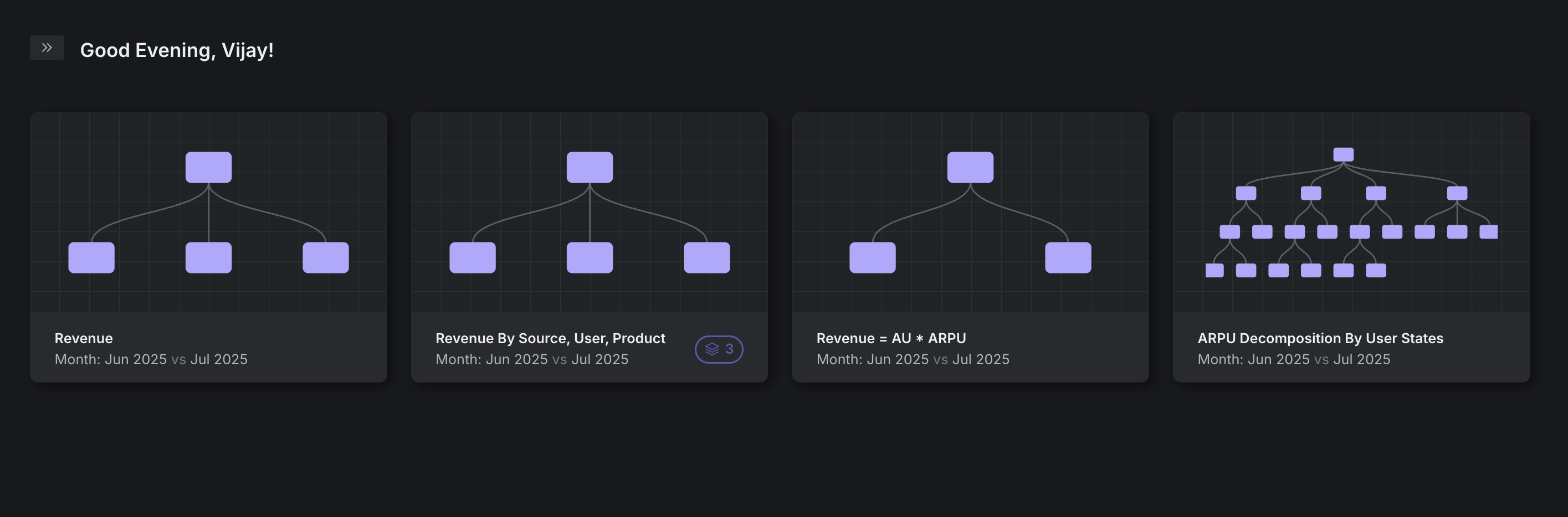

In fact, building even higher-level abstractions on top of data models, such as metric trees, can dramatically improve organizational efficiency by letting the entire organization participate in this process without needing deep knowledge of the underlying data models. This is the holy grail of "self-serve analytics" that many in the industry aspire to achieve.
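A metric tree is commonly described as a decomposition of a top-level business metric into the input metrics that drive it. The sketch below is a minimal illustration under that assumption only: `MetricNode`, `what_if`, the decomposition revenue = visitors × conversion rate × average order value, and the input numbers are all hypothetical, chosen for illustration rather than taken from any real tool or dataset. It shows how such an abstraction lets someone pose a hypothesis-style question ("what happens to revenue if conversion rises 10%?") without touching the underlying data models.

```python
from dataclasses import dataclass, field
from math import prod
from typing import Callable, Dict, List, Optional


@dataclass
class MetricNode:
    """One node in a hypothetical metric tree.

    Leaf nodes read their value from observed inputs; derived nodes
    combine their children's values with a `combine` function.
    """
    name: str
    children: List["MetricNode"] = field(default_factory=list)
    combine: Optional[Callable[[List[float]], float]] = None

    def evaluate(self, inputs: Dict[str, float]) -> float:
        if not self.children:
            # Leaf metric: look up its observed value by name.
            return inputs[self.name]
        if self.combine is None:
            raise ValueError(f"derived metric {self.name!r} needs a combine function")
        # Derived metric: evaluate children recursively, then combine them.
        return self.combine([child.evaluate(inputs) for child in self.children])


def what_if(root: MetricNode, inputs: Dict[str, float],
            metric: str, relative_change: float) -> float:
    """Return the change in `root` when one leaf input moves by `relative_change`."""
    baseline = root.evaluate(inputs)
    changed = dict(inputs)  # copy so the caller's inputs are untouched
    changed[metric] *= 1 + relative_change
    return root.evaluate(changed) - baseline


# Illustrative (hypothetical) decomposition:
# revenue = orders * avg_order_value, orders = visitors * conversion_rate
visitors = MetricNode("visitors")
conversion_rate = MetricNode("conversion_rate")
avg_order_value = MetricNode("avg_order_value")
orders = MetricNode("orders", [visitors, conversion_rate], prod)
revenue = MetricNode("revenue", [orders, avg_order_value], prod)

if __name__ == "__main__":
    # Made-up inputs for illustration only.
    inputs = {"visitors": 10_000, "conversion_rate": 0.02, "avg_order_value": 50.0}
    print("baseline revenue:", revenue.evaluate(inputs))
    print("revenue change if conversion +10%:",
          what_if(revenue, inputs, "conversion_rate", 0.10))
```

Propagating a change through the tree only gives its arithmetic consequence for the top-level metric; whether a given intervention actually moves the conversion rate is the causal question that an experiment still has to answer.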

However, we need to keep front and center the idea that solving these engineering problems is ultimately in service of the scientific method and of the core goal of data work: to uncover and act on the causal drivers of the business.