In a prior article listing three key principles for operating a modern org, I made the point that good tooling has the power to drive sustained, high-quality inputs and decision-making. This clearly applies in the data world, since the main reason for collecting data is to inform decision-making. But I have been wondering of late whether a key force that animated much of the tooling in the data stack (“let’s engineer the data world”) has swung too far and diluted that central purpose.

First, some background. Over the last decade, I had a front-row seat as a practitioner to a Cambrian explosion of data tools. Just compare the Data Ecosystem chart as it was first conceived and published in 2012 with the 2021 MAD Landscape version (credit to Matt Turck and his team for a decade of tracking this!). The sheer quantity and specialization of tools is mind-boggling.

Now, this explosion was inevitable. Every company was, or still is, on the path to becoming a data company, and the forces that triggered the explosion can be traced not only to data taking center stage but also to the growth of cloud storage and computing (and looser capital flows!). If those forces set the explosion off, the force that has shaped most of the tooling is the push to bring engineering principles into the data domain: “engineering the data world,” so to speak.

This force is foundationally sound. After all, engineers are at the forefront of building productivity tools, and ideas like versioning, testing workflows, and code observability are powerful, ecumenical, and time-tested. But I have noticed some undesirable second-order effects as a consequence:

1) greater fragmentation of workflows due to the specialization of tools, which, ironically, is leading to even more tools (data quality, lineage, etc.); highly specialized tooling ecosystems work much better when engineers are the only collaborators.

2) managing work in code as the only viable mechanism

- analysts are adopting the standard engineering kit (code editors, git, writing pipeline code and tests), and some otherwise impactful analysts are pressured to learn new skills and perform new tasks in order to participate

3) a rift between producers and consumers: engineer-like personas own the means of production, while consumers polarize the other way

- “no-code” tools are considered a regression by some, while they remain the only option for others, and these two worlds don’t communicate well

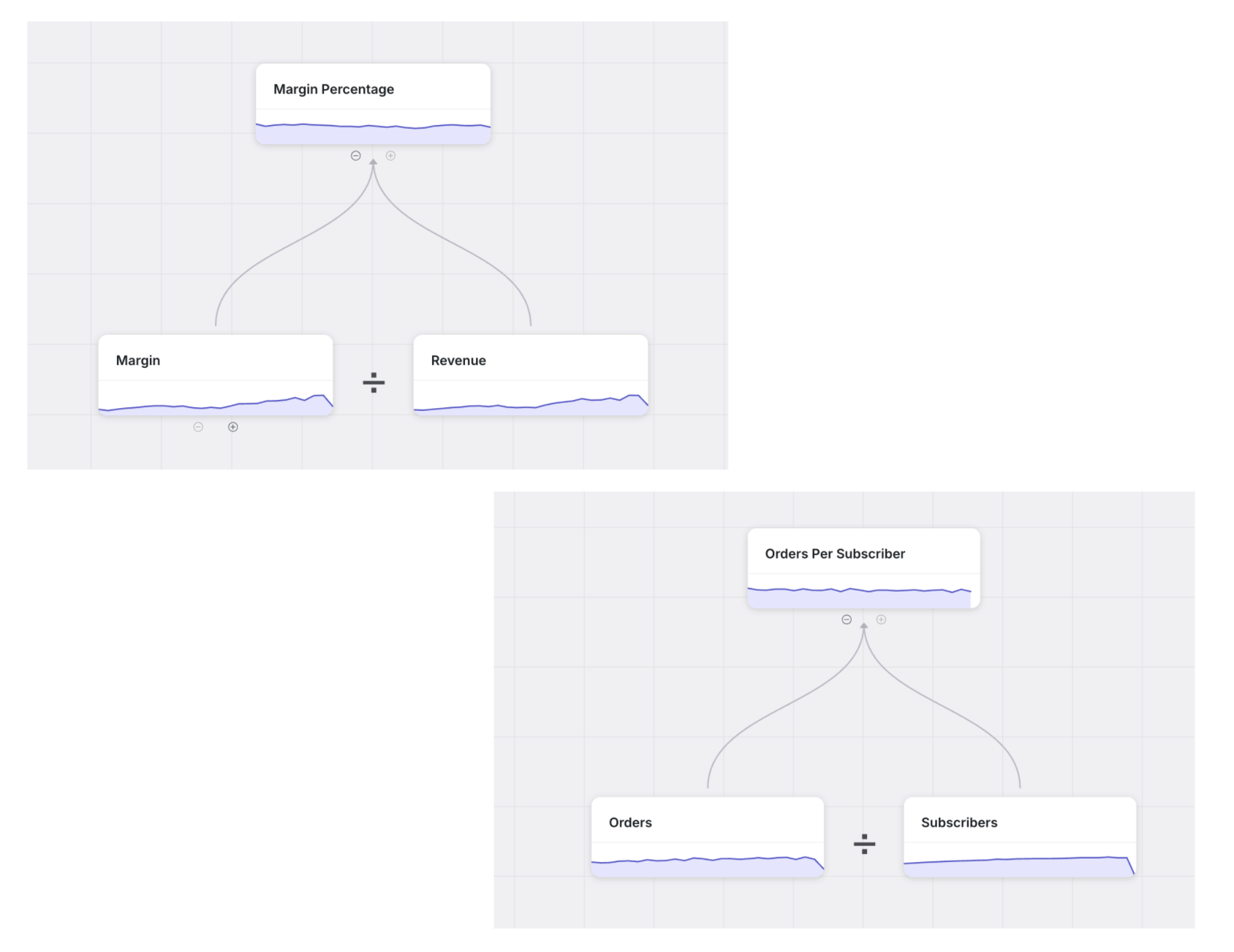

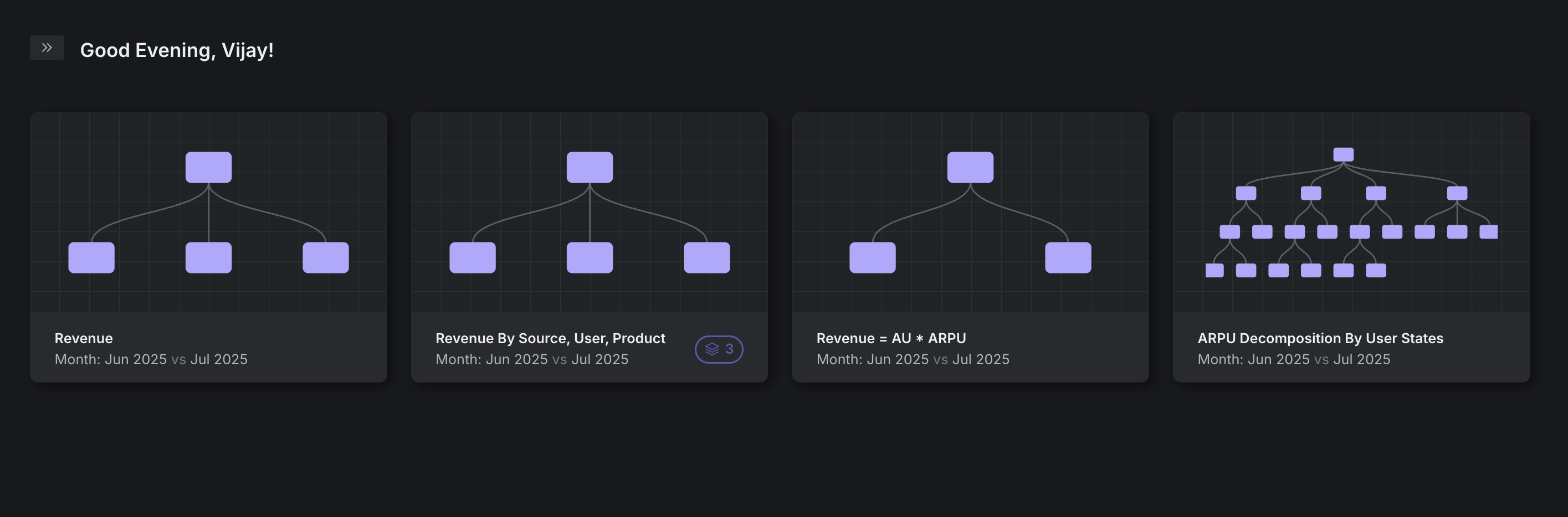

4) in the data world, code is only one piece: data assets and business-logic variations at the consumption edge are where the decision-making power lies, so well-managed code covering only a portion of the transformations is incomplete

5) some technical analysts pulling back from directly influencing decisions: “my job is to set up the system vs. understand the system”

6) ultimately, a reduction in the quality and volume of hardcore analytical work in favor of automating and monitoring upstream data pipes

Benn Stancil from Mode wrote a thoughtful piece, “Work like an analyst,” in which he envisions a world with more blurring and more unification between engineers, analysts, and the business, even to the extreme where an analyst is not a person but a role.

I’m excited about this world too. But to get there, we have to ask and answer these questions:

- how do we carefully select and apply relevant first-class engineering principles to the data world?

- how do we enable users with a wide range of technical skills to not only consume but also produce new business logic and data assets?

- how can we unify the business logic generated via code and via no-code UIs?

- how do we let analysts and data scientists and operators be the best versions of themselves without taking on new roles and responsibilities?