The early data team in any modern org is a Swiss Army knife: it does it all, from ingestion to transformations to reporting to deep dives, even the first few predictive models. But as the organization grows, you add talented folks to the various domains (sales, marketing, product, finance, operations) who can execute and drive metrics. Within this group a core of analytically minded operators invariably emerges, and it is among them that you often hear the phrase: “I wish I knew SQL.”

In its most benign interpretation, this phrase reflects the operators’ desire to control their own destiny. But more often than not, it represents frustration at not being able to run the business with analytical rigor, with one too many questions left unanswered. The main driver: the same data team that was heroic in the early days has unwittingly become a massive bottleneck.

Requests to the data team take days or weeks (or never get done), followed by more days of back-and-forth over “this doesn’t look right.” Then the collaborators realize they have been answering a different question from the one they set out to answer, some fingers get discreetly pointed, and finally a decision or two gets made in the “fog of analysis.” A week later, over a drink, the operator sighs and says, “I wish I knew SQL.”

Here’s the thing, though: at my prior startup (and I have since seen this at several others), we actually tried teaching SQL to data-savvy operators. But even setting aside how hard it is for a busy FP&A analyst to find the time to learn SQL, the issue is not learning the syntax of the language.

The hard part is learning how to apply it: knowing the tables, how they need to be joined, which filters to apply (oh, don’t include those 9 days when event tracking went bust, but it’s OK to include them if you are calculating Y vs. X!), and the sequence of operations to perform. It is all the nuance of expressing correct business logic on a data swamp full of idiosyncrasies and silent pitfalls.

This learning curve is steep, and for 95% of these operators insurmountable, because it is not a one-time commitment but an ongoing one. Skilled data analysts are in fact master artisans, and expecting this skill to be picked up and maintained in a casual, part-time capacity is just silly.

Instead of pushing SQL onto these operators, which is a failing tactic, we need to break this repeated cycle of frustration. A core mistake we made over the past 5–7 years, in the first wave of modern cloud data platforms, was to avoid strong business semantic modeling. “Let’s just load the data and figure it out,” we said.

Over time, all the transformation work gets locked into the hands of the data team, producing a spaghetti mess of SQL or Python code and datasets. Worse, the data team lacks the right building blocks on which to collaborate with business and ops teams, let alone “hand off” common use cases to them.

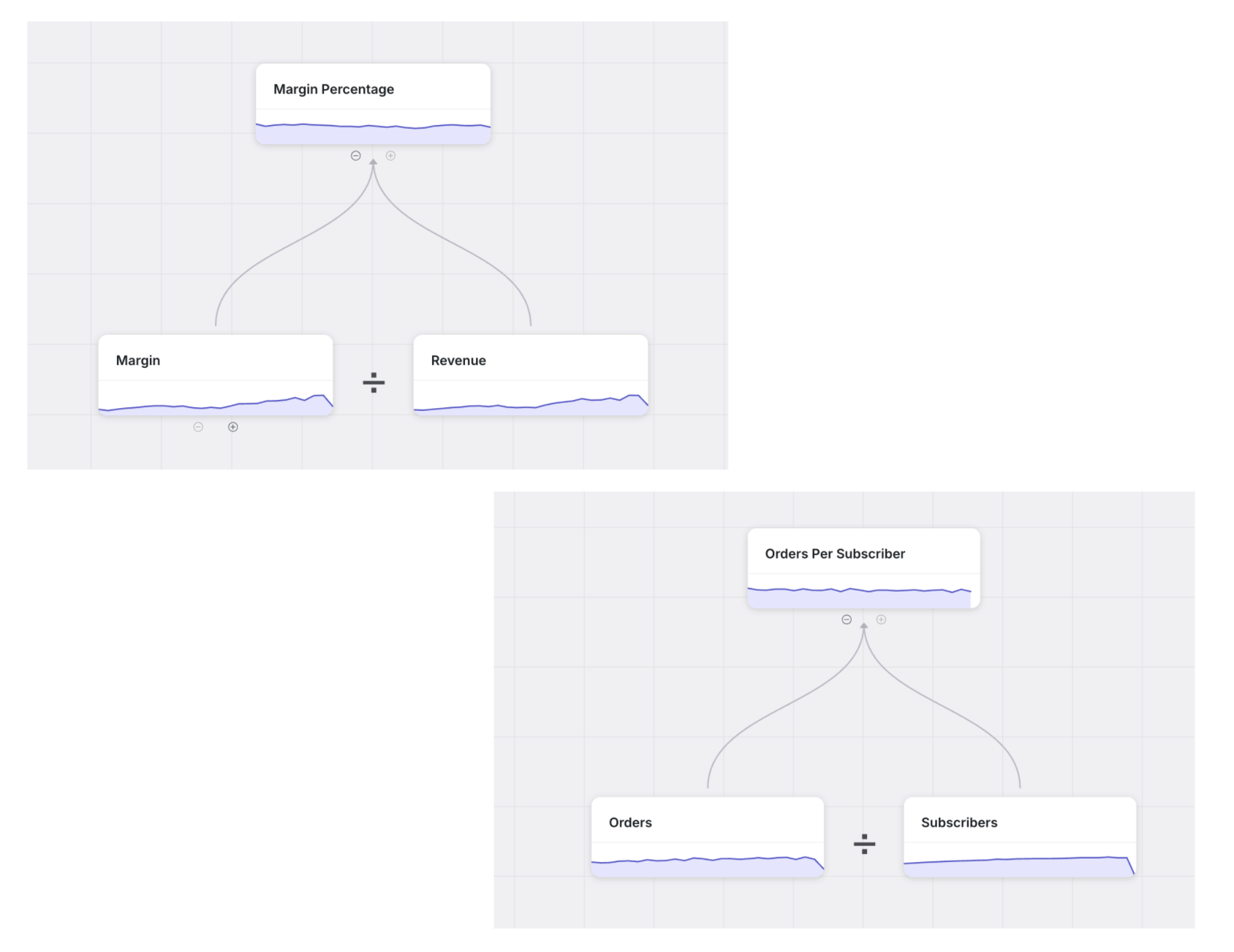

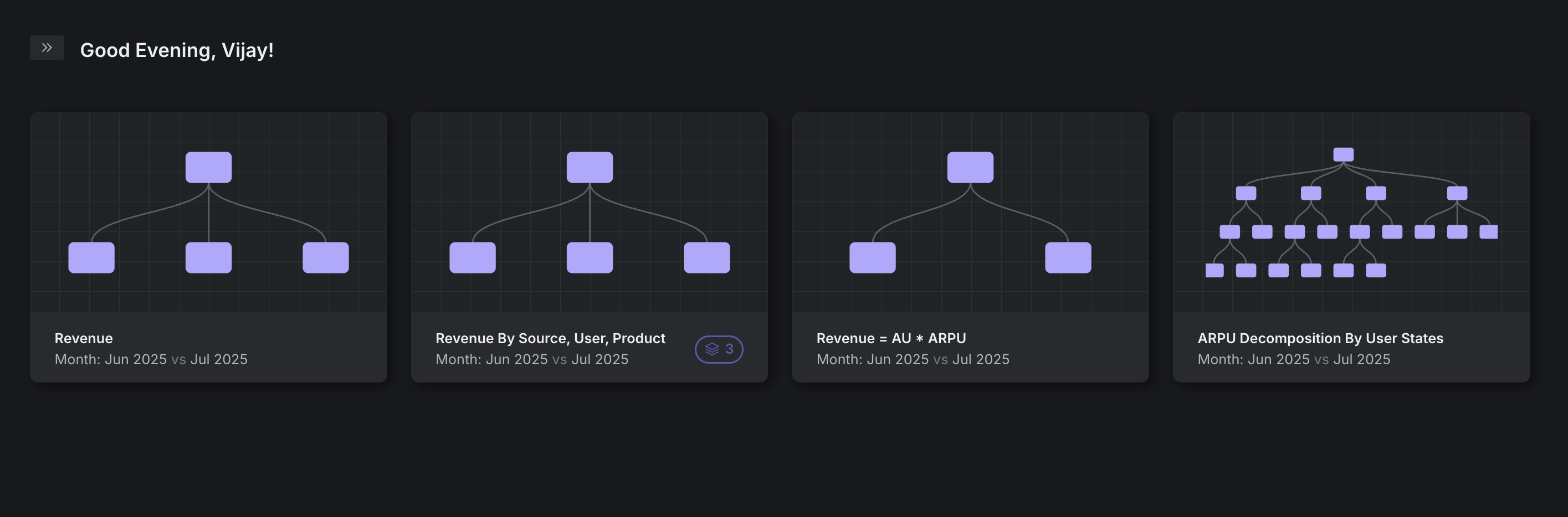

We need to invest in reusable, composable modeling of the business logic over datasets, and explicitly design and shape the interface between the data team and the operators. This interface is not SQL blocks, and it is not even all datasets: it is business semantics mapped to the data warehouse, meaning metrics, attributes, entities, relationships, business processes, and so on.
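To make the idea a little more concrete, here is a minimal Python sketch of what such an interface might look like. It is a toy model under stated assumptions, not any particular product’s API: the classes `Entity`, `Relationship`, and `Metric`, the function `compile_metric`, the table names `fct_events` and `dim_accounts`, and the outage dates are all hypothetical. The data team defines a metric once, including a filter that excludes a nine-day tracking outage, and a small compiler generates the join and filter SQL whenever an operator asks for that metric broken down by some attribute.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Entity:
    """A business entity mapped to a warehouse table (hypothetical schema)."""
    name: str
    table: str
    key: str


@dataclass(frozen=True)
class Relationship:
    """Declares how one entity joins to another via a foreign key."""
    from_entity: Entity
    to_entity: Entity
    from_column: str  # foreign-key column on from_entity's table


@dataclass(frozen=True)
class Metric:
    """A metric defined once by the data team, with its business rules baked in."""
    name: str
    entity: Entity
    expression: str
    filters: tuple[str, ...] = ()


def compile_metric(metric: Metric, by_entity: Entity, by_column: str,
                   relationships: list[Relationship]) -> str:
    """Generate SQL for `metric` grouped by `by_entity.by_column`.

    Only single-hop joins are supported in this sketch.
    """
    base = metric.entity
    dim = f"{by_entity.table}.{by_column}"
    joins = []
    if by_entity != base:
        # Look up the declared relationship instead of asking the operator for join keys.
        rel = next((r for r in relationships
                    if r.from_entity == base and r.to_entity == by_entity), None)
        if rel is None:
            raise ValueError(f"no relationship from {base.name} to {by_entity.name}")
        joins.append(f"JOIN {by_entity.table} ON {base.table}.{rel.from_column} = "
                     f"{by_entity.table}.{by_entity.key}")
    lines = [f"SELECT {dim}, {metric.expression} AS {metric.name}",
             f"FROM {base.table}", *joins]
    if metric.filters:
        # Business rules travel with the metric, so every query inherits them.
        lines.append("WHERE " + " AND ".join(metric.filters))
    lines.append(f"GROUP BY {dim}")
    return "\n".join(lines)


# Hypothetical model definitions, owned by the data team.
accounts = Entity("account", "dim_accounts", "account_id")
events = Entity("event", "fct_events", "event_id")
event_account = Relationship(events, accounts, "account_id")

active_accounts = Metric(
    name="active_accounts",
    entity=events,
    expression="COUNT(DISTINCT fct_events.account_id)",
    # Hypothetical dates: exclude the nine days when event tracking was broken.
    filters=("fct_events.event_date NOT BETWEEN '2023-03-01' AND '2023-03-09'",),
)

# An operator asks for "active accounts by segment" without writing any SQL.
print(compile_metric(active_accounts, accounts, "segment", [event_account]))
```

The design choice worth noting is that the business rule lives in the metric definition rather than in each query, so an operator asking for active accounts by segment inherits the outage exclusion without needing to know it exists. A real semantic layer would also have to handle multi-hop joins, fan-out when joining to many-side tables, and per-use exceptions such as the “OK to include for Y vs. X” case, all of which this sketch ignores.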

And applications can then natively exploit the meaning encoded in those semantics to generate high-value data transformations that operators can self-serve. Let’s not settle for SQL and Python editors that are great for bespoke work whose output gets turned into charts. Yes, that works early on, when a small number of data folks are heroes shining a light into complete darkness.

But if you want to build a sustainable business, a sustainable organization where analytically minded operators collaborate seamlessly with the data team, it is critical to build the right abstractions: ones that operators intuitively understand and can self-serve, compose, and act upon.

Because if we do this well, you will never again hear an operator say, “I wish I knew SQL.”